DocQA Generator

Last Update: 05/02/2025

Overview

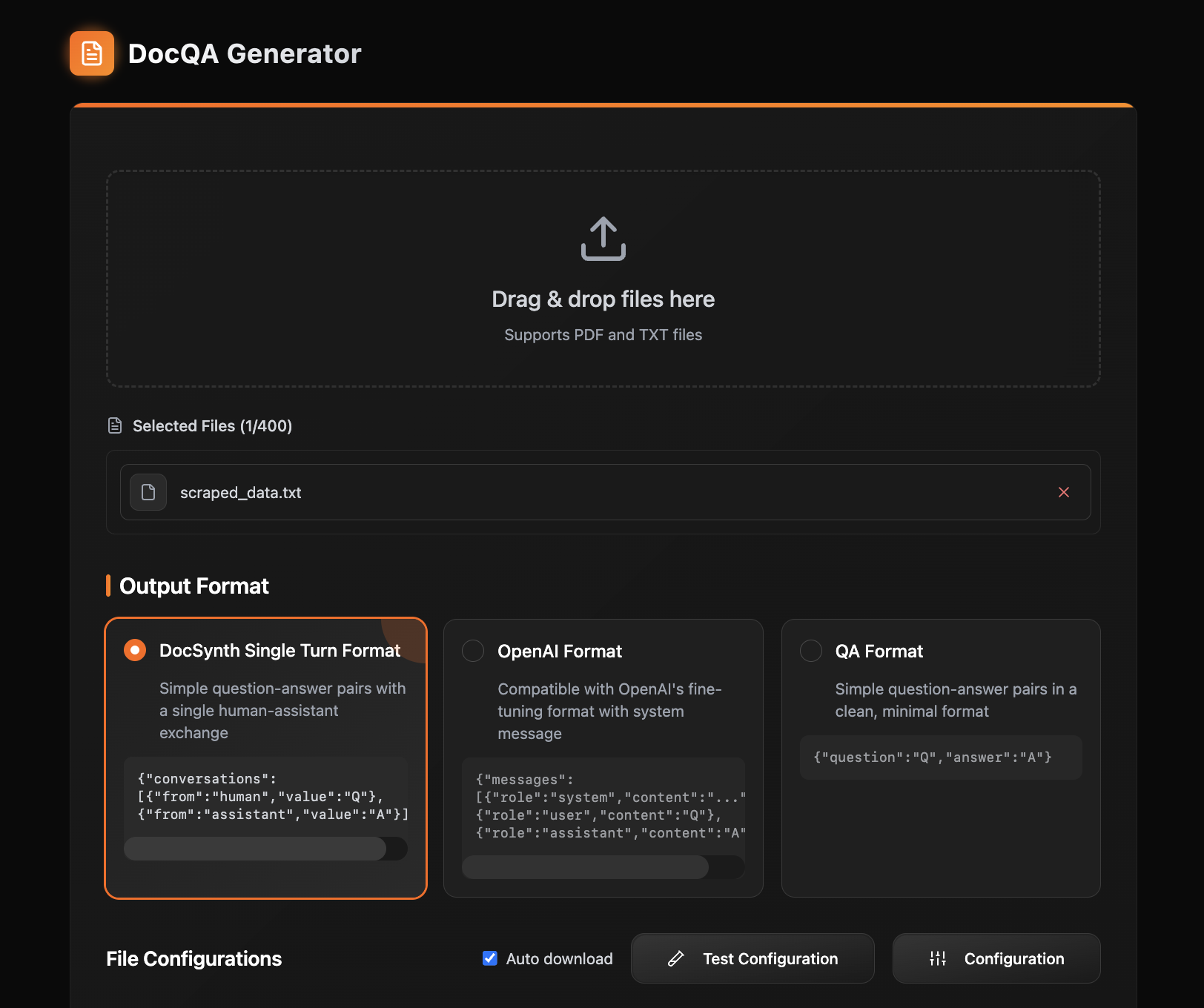

Easily upload or drag and drop multiple PDF and/or text files to automatically generate question-answer (QA) pairs. Choose from the following output formats based on your use case.

DocSynth Single-Turn

Generates simple single-turn question-answer exchanges between a human and an assistant.

Format: json

{
  "conversations": [
    {"from": "human", "value": "Q"},
    {"from": "assistant", "value": "A"}
  ]
}

QA Format

Provides clean, minimalistic question-answer pairs suitable for lightweight applications.

Format: json

{
  "question": "Q",
  "answer": "A"
}

OpenAI Format

Generates data compatible with OpenAI's fine-tuning requirements, including an optional system message.

Format: json

{
  "messages": [
    {"role": "system", "content": "..."},
    {"role": "user", "content": "Q"},
    {"role": "assistant", "content": "A"}
  ]
}

File Configuration

Customize how DocSynth generates question-answer (QA) pairs by configuring the following parameters:

Chunk Size

Default: 1900 words

Splits each input file into chunks of at most the specified number of words. This controls how much source text is available as context when generating each chunk's questions.
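The chunking step can be sketched in Python as follows. This is a minimal illustration, not DocSynth's actual implementation: it assumes words are separated by whitespace and that chunks do not overlap, and the function name `chunk_words` is hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 1900) -> list[str]:
    """Split text into consecutive chunks of at most chunk_size words.

    Assumption: words are whitespace-separated and chunks do not overlap.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    words = text.split()
    return [
        " ".join(words[i : i + chunk_size])
        for i in range(0, len(words), chunk_size)
    ]


# Example: a 5,000-word document with the default chunk size
doc = " ".join(f"w{i}" for i in range(5000))
chunks = chunk_words(doc)
print([len(c.split()) for c in chunks])  # [1900, 1900, 1200]
```

With these assumptions, only the final chunk can be shorter than Chunk Size.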

Questions per Chunk

Default: 3

Defines how many QA pairs should be generated from each chunk. Increasing this number can yield more granular coverage of the source material.
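As a rough worked example of how Chunk Size and Questions per Chunk combine: a file yields ceil(word count / chunk size) chunks, and each chunk contributes Questions per Chunk pairs. The sketch below assumes non-overlapping chunks and that the model returns exactly the requested number of pairs for every chunk; `estimate_qa_pairs` is a hypothetical helper, and real output counts may differ.

```python
def estimate_qa_pairs(word_count: int, chunk_size: int = 1900,
                      questions_per_chunk: int = 3) -> int:
    """Estimate QA pairs per output file: number of chunks x questions per chunk.

    Assumes non-overlapping chunks and that every chunk yields exactly
    questions_per_chunk pairs.
    """
    # Integer ceiling division: a partial final chunk still counts as a chunk
    num_chunks = (word_count + chunk_size - 1) // chunk_size
    return num_chunks * questions_per_chunk


print(estimate_qa_pairs(5000))                         # 9  (3 chunks x 3)
print(estimate_qa_pairs(5000, questions_per_chunk=5))  # 15 (3 chunks x 5)
print(estimate_qa_pairs(5000, chunk_size=1000))        # 15 (5 chunks x 3)
```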

Generation Count

Default: 1

Specifies how many separate JSONL files containing QA pairs will be generated from a single input file. Useful for creating multiple versions or batches of QA data.
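JSONL stores one JSON object per line. The sketch below shows what a Generation Count of 2 might look like on disk: one JSONL file per generation run. The `<stem>_gen<N>.jsonl` naming scheme and the helper names `write_generations` and `read_jsonl` are hypothetical; DocSynth's actual file names may differ.

```python
import json
import tempfile
from pathlib import Path


def write_generations(runs, out_dir, stem):
    """Write each generation run's QA pairs to its own JSONL file.

    `runs` holds one list of QA dicts per generation. The
    "<stem>_gen<N>.jsonl" naming scheme is hypothetical.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for n, pairs in enumerate(runs, start=1):
        path = out / f"{stem}_gen{n}.jsonl"
        with path.open("w", encoding="utf-8") as f:
            for pair in pairs:
                # JSONL: one JSON object per line
                f.write(json.dumps(pair, ensure_ascii=False) + "\n")
        paths.append(path)
    return paths


def read_jsonl(path):
    """Load a JSONL file back into a list of dicts, skipping blank lines."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Generation Count = 2: two independent batches from the same input file
runs = [
    [{"question": "Q1", "answer": "A1"}],
    [{"question": "Q1 (variant)", "answer": "A1 (variant)"}],
]
with tempfile.TemporaryDirectory() as tmp:
    for p in write_generations(runs, tmp, "input_file"):
        print(p.name, read_jsonl(p))
```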

Temperature

Default: 0.7

Controls the randomness and creativity of the generated QA pairs.

- Lower values (e.g., 0.2–0.4) yield more deterministic and focused outputs.

- Higher values (e.g., 0.8–1.0) produce more diverse and creative questions.
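This page does not specify how DocSynth applies temperature internally. In most language-model sampling, though, temperature divides the model's raw scores (logits) before a softmax turns them into probabilities, so low values concentrate probability on the top choice and high values spread it out. The sketch below illustrates that general mechanism with made-up logits; it is not DocSynth code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after scaling by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # illustrative scores for three candidate tokens
for t in (0.2, 0.7, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 nearly all probability lands on the first token; at 1.0 the other tokens keep a noticeably larger share, which is why higher temperatures produce more varied questions.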

System Prompt

Provides high-level instructions to guide the generation process.

Use this field to define the tone, style, or focus of the QA pairs.

For example:

"Generate clear, concise questions suitable for high school students reviewing biology topics."
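One plausible way a system prompt is used is as the system message of a chat-style request sent for each chunk. The sketch below is purely hypothetical: the function name `build_generation_messages`, the user-message wording, and the request shape are assumptions, not DocSynth's documented behavior.

```python
def build_generation_messages(system_prompt: str, chunk: str,
                              questions_per_chunk: int = 3) -> list[dict]:
    """Hypothetical: assemble a chat-style request for one chunk of text."""
    instruction = (
        f"Write {questions_per_chunk} question-answer pairs using only "
        f"the text below.\n\n{chunk}"
    )
    return [
        # The System Prompt sets tone, style, and focus for every chunk
        {"role": "system", "content": system_prompt},
        # The chunk itself travels in the user message
        {"role": "user", "content": instruction},
    ]


prompt = ("Generate clear, concise questions suitable for high school "
          "students reviewing biology topics.")
chunk = "Photosynthesis converts light energy into chemical energy."
for message in build_generation_messages(prompt, chunk):
    print(message["role"], "->", message["content"][:60])
```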

(OpenAI Format) System Message

Sets the content of the "system" message in the OpenAI-compatible output format. This message serves as a foundational instruction for how the assistant should behave when generating QA pairs.

Example Output:

{
  "messages": [
    {
      "role": "system",
      "content": "Generate concise and factual QA pairs based on the provided document, focusing on key concepts."
    },
    {
      "role": "user",
      "content": "Q"
    },
    {
      "role": "assistant",
      "content": "A"
    }
  ]
}

You can reference the general System Prompt here for consistency or provide unique, format-specific instructions tailored to fine-tuning and API compatibility.
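To make the relationship between the three output formats concrete, the following minimal Python sketch renders one QA pair in each of them and serializes each record as a single JSONL line. The function names are hypothetical; the record shapes follow the format examples on this page, and the system message is included only when one is provided.

```python
import json


def to_docsynth(q, a):
    """DocSynth Single-Turn record."""
    return {"conversations": [
        {"from": "human", "value": q},
        {"from": "assistant", "value": a},
    ]}


def to_qa(q, a):
    """QA Format record."""
    return {"question": q, "answer": a}


def to_openai(q, a, system_message=None):
    """OpenAI Format record; the system message is optional."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages += [
        {"role": "user", "content": q},
        {"role": "assistant", "content": a},
    ]
    return {"messages": messages}


def to_jsonl_line(record):
    """Serialize one record as a single line of JSON."""
    return json.dumps(record, ensure_ascii=False)


system = ("Generate concise and factual QA pairs based on the provided "
          "document, focusing on key concepts.")
for record in (to_docsynth("Q", "A"), to_qa("Q", "A"), to_openai("Q", "A", system)):
    print(to_jsonl_line(record))
```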